Anthropic just tightened the rules on how Claude can be used. The new usage policy gets very specific: no malware generation, no hacking help, no political manipulation, no touching weapons (from nukes to bio). These bans apply to Claude in consumer-facing use. Enterprise deployments get more room to maneuver.

What is Anthropic saying?

Anthropic admits the reason is their own progress. New agent-like features (Claude Code and Computer Use) are powerful enough to raise real risks. In their words:

“These powerful capabilities introduce new risks, including potential for scaled abuse, malware creation, and cyber attacks.”

Translation: the tools work well enough that misuse is now a real threat.

What it means (in human words)

If you’re building with Claude for things like:

- Hacking systems, finding vulnerabilities, making malware, or running denial-of-service attacks.
- Cooking up explosives or CBRN weapons (chemical, biological, radiological, nuclear).
- Political ops designed to “disrupt democratic processes” or target voters.

The short version, or let’s just call it the official statement: you can’t.

Which means the company now has:

- Red lines in bold. No more hiding behind “we didn’t know the rules.” Anthropic put them in writing.
- Consumer safety first. If Claude is public-facing, the strict policy applies. Enterprise gets more room, but don’t confuse that with a free pass.
- AI safety made real. These risks aren’t theoretical anymore. Cybercrime, election meddling, weapons: Anthropic is saying the edge cases are now mainstream threats.

Connecting the Dots

LLM vendors are starting to wake up to the world we live in - and take a stand.

Anthropic’s Mission

Anthropic positions itself as a public-benefit AI company with a clear north star: to build reliable, interpretable, and steerable AI systems that benefit humanity in the long run.

Sharing the Numbers

Let’s talk scale and signal. Claude isn’t niche anymore:

- ~19 million monthly active users globally, with about 2.9 million accessing it via the app each month. Notably, the U.S. and India alone account for 33.13% of that base.
- Demographics skew young and male: roughly 77% male, with the 18–24 age group making up over half of active users. The next largest group? Those aged 25–34.
- Behind the scenes, Anthropic is pulling in hundreds of millions in app downloads and heading for a multi-billion-dollar annual revenue run rate, proving Claude is not just used, it’s adopted.

Cybersecurity, in Numbers

The AI-powered threat landscape is escalating, and here’s what that looks like in 2025:

- 85% of cybersecurity leaders say generative AI is directly increasing cyberattack volume and sophistication.
- 142 million AI-generated phishing links were blocked in just Q2 2025, a jump from the previous quarter.
- Indian authorities report that 82.6% of phishing emails now leverage AI tools.
- Cybercriminals are running 36,000 automated scans per second, resulting in 1.7 billion stolen credentials flooding the dark web.
- And breaches tied to hidden or unauthorized AI tools (a.k.a. “shadow AI”) cost organizations an extra $670,000 on average.

Bottom Line

What Changed:

- Red lines are explicit: no cyberattacks, weapons, or election interference.
- Consumer apps = stricter rules.
- Enterprise = more flexibility, but not a free pass.

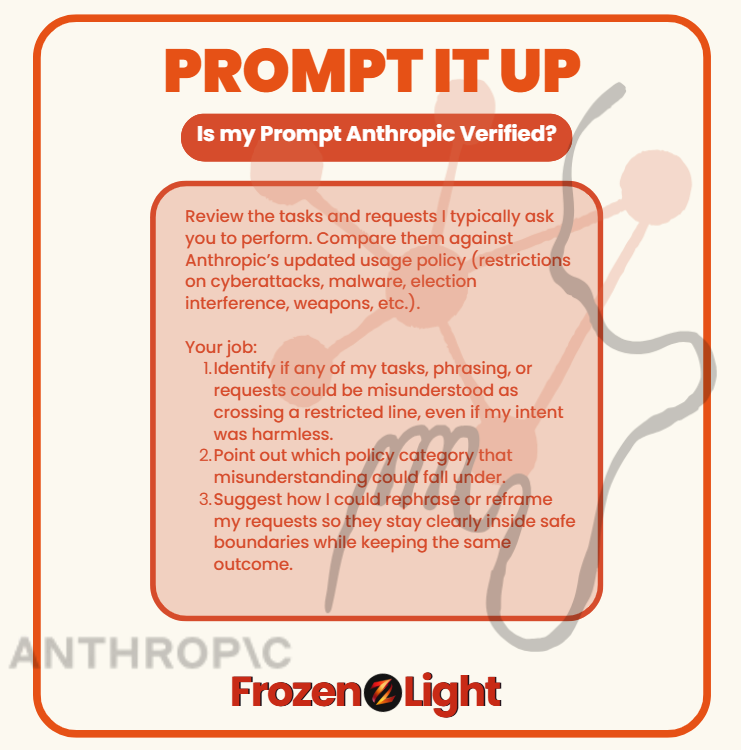

Prompt it up:

Most of us don’t use Claude (or any other LLM) to build malware, hack elections, or make weapons. But with Anthropic’s new policy, even innocent tasks could be misunderstood if worded the wrong way.

Better to check before you get flagged for something you never intended.

Copy & Paste

"Review the tasks and requests I typically ask you to perform. Compare them against Anthropic’s updated usage policy (restrictions on cyberattacks, malware, election interference, weapons, etc.).

Your job:

- Identify if any of my tasks, phrasing, or requests could be misunderstood as crossing a restricted line, even if my intent was harmless.
- Point out which policy category that misunderstanding could fall under.
- Suggest how I could rephrase or reframe my requests so they stay clearly inside safe boundaries while keeping the same outcome."

⚡ Run this in any LLM and it will audit how you ask for things - showing you if your style could look like a policy violation, even when you’re doing nothing wrong.
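One catch: if your LLM has no memory of past chats, "the tasks I typically ask you to perform" gives it nothing to work from. Here is a minimal Python sketch that assumes you list your tasks yourself and pastes them into the same prompt. The function name `build_audit_prompt` and the sample tasks are our own illustration, not anything published by Anthropic, and the script only builds text. It does not call any LLM.

```python
from __future__ import annotations

# Policy areas named in the prompt above; this is not an exhaustive list.
POLICY_AREAS = ["cyberattacks", "malware", "election interference", "weapons"]


def build_audit_prompt(tasks: list[str]) -> str:
    """Return the audit prompt with an explicit, numbered task list.

    Hypothetical helper: it only assembles a string to paste into an LLM.
    """
    if not tasks:
        raise ValueError("provide at least one task to audit")

    # Number the tasks so the model can refer back to them one by one.
    task_lines = "\n".join(
        f"{i}. {task.strip()}" for i, task in enumerate(tasks, start=1)
    )
    areas = ", ".join(POLICY_AREAS)

    return (
        "Review the tasks and requests listed below. Compare them against "
        f"Anthropic's updated usage policy (restrictions on {areas}, etc.).\n\n"
        "My tasks:\n"
        f"{task_lines}\n\n"
        "Your job:\n"
        "- Identify if any of my tasks, phrasing, or requests could be "
        "misunderstood as crossing a restricted line, even if my intent "
        "was harmless.\n"
        "- Point out which policy category that misunderstanding could "
        "fall under.\n"
        "- Suggest how I could rephrase or reframe my requests so they stay "
        "clearly inside safe boundaries while keeping the same outcome."
    )


if __name__ == "__main__":
    # Sample tasks are made up for illustration; swap in your own.
    print(build_audit_prompt([
        "Explain how phishing emails are detected",
        "Summarize a port-scan log from our own servers",
    ]))
```

Paste the printed text into whichever LLM you use. Listing the tasks explicitly means the audit works from what you actually ask for, not from what the model guesses you ask for.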

Frozen Light Team Perspective

What can we say? Experience matters. You get better at what you do the more time you’ve been doing it. Nothing in Anthropic’s new policy is truly new. It’s not that they suddenly discovered their LLM could be misused. They’ve always known.

So what happened now?

From day one, Anthropic’s mission has been “for the benefit of humanity.” Their founders left OpenAI with that promise on their flag. But if you read the updated policy, you’ll notice something missing: it no longer speaks in the same human-benefit language they used to define themselves by.

To us, this feels like a shift that comes with experience. A move from idealism to realism. It’s not that the risks weren’t there before. It’s that now, Anthropic can’t ignore them anymore.

And if you’ve used LLMs, you know the feeling: you ask for something harmless, and suddenly the model refuses. You hit a wall with no explanation. Frustrating, right? But often, that refusal happens because your request looked like a misuse, even if your intent was the opposite.

Take cybersecurity: asking how an attack works can be about preparing defenses. Makes sense. But to the model, it can look exactly like planning an attack. That’s the tension Anthropic is facing.

So what do we think they’ve decided? Two possibilities:

- Option 1: Washing their hands. Cutting off all activity that even looks like risk, on both the attack side and the defense side. If that’s the case, not cool. That leaves builders blind.
- Option 2: Drawing sharper lines. Learning from experience, recognizing that misuse and protection can look similar, and trying to define the difference. If that’s where this lands, good on them. We want Claude to be part of the defense, not just the avoidance.

Because what we hate most is when something happens, and we, the builders and the users, aren’t included in the conversation.

We kind of feel this is what’s happening here: someone made a decision for all of us.

And whether it’s washing their hands or drawing sharper lines, the choice wasn’t ours - but we’ll live with the consequences.