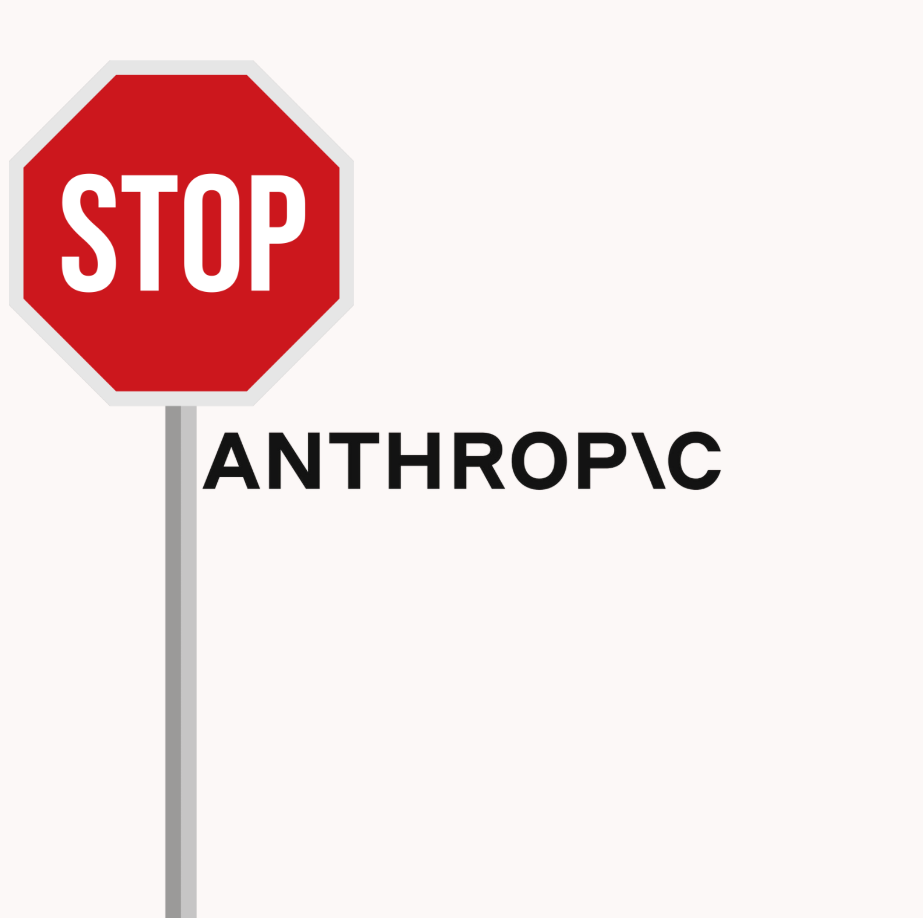

Anthropic just blocked OpenAI from using the Claude API - including Claude Code.

Why?

Because they believe OpenAI broke the rules.

OpenAI had access to Claude and used it in their internal testing tools.

They were comparing Claude to their own models - possibly even using it to help improve GPT‑5.

Anthropic didn’t like that.

Their terms say you can’t use Claude to build or train a competing model.

So they cut OpenAI off.

Right before GPT-5’s expected launch.

What the Companies Are Saying

Anthropic says OpenAI broke the rules.

They don’t allow people to use Claude to train or improve competing models - especially not at this scale.

Their position, roughly:

“We’re fine with casual comparisons. But using Claude to improve your own model? That’s not allowed.”

OpenAI isn’t happy.

They said they’re “disappointed” and pointed out that Anthropic still has access to OpenAI’s API.

They also said:

“Benchmarking competitors is standard practice.”

But they didn’t say whether they actually used Claude to train GPT‑5.

What That Means (In Human Words)

OpenAI had access to Claude’s API.

They plugged it into their internal tools and tested it alongside their own models - probably to see how Claude handled things like code, safety prompts, and reasoning.

Anthropic saw this as crossing a line.

Their terms say you can’t use Claude to build or train a competing model.

So they shut the door.

This isn’t just OpenAI vs. Anthropic.

If you’re giving API access to your software - this matters.

You’re trusting people to use it without learning from it in ways that hurt you.

Connecting the Dots

Is this even news?

Let’s look at the facts.

What do Anthropic’s Terms of Service actually say?

According to Anthropic’s commercial Terms of Service, customers are not allowed to:

- “Use the Services to build a competing product or service, including to train competing AI models.”
- “Reverse engineer or duplicate the Services.”

These restrictions are the basis Anthropic cited for revoking OpenAI’s API access.

Source: WIRED – July 2025

What is the purpose of giving someone API access?

An API (Application Programming Interface) allows other products or services to interact with a model at scale - through automation.

When companies give API access, it’s usually:

- For embedding the AI into tools, apps, or workflows
- To run large-scale tasks like summarisation, coding, or classification
- To allow developers to test and build new features using the AI model’s capabilities

API usage is almost never manual. It means bulk access, repeated use, and usually automation - which is exactly why companies set rules around how it’s used.
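To make “rules around how it’s used” a bit more concrete: alongside contractual terms, API providers commonly enforce technical limits such as per-key rate limits. Below is a minimal token-bucket sketch in Python, assuming each caller is identified by an API key. The class names, capacities and key strings are hypothetical; this is not how Anthropic or any specific provider implements its limits.

```python
import time
from typing import Dict, Optional


class TokenBucket:
    """Allows bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float, now: float) -> None:
        self.capacity = capacity
        self.rate = rate
        self.tokens = capacity  # start with a full bucket
        self.last = now         # time of the last refill

    def allow(self, now: float) -> bool:
        # Refill in proportion to elapsed time, never above capacity.
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1  # spend one token on this request
            return True
        return False  # over the limit: a service might answer HTTP 429 here


class PerKeyLimiter:
    """Keeps one independent bucket per (hypothetical) API key."""

    def __init__(self, capacity: float, rate: float) -> None:
        self.capacity = capacity
        self.rate = rate
        self.buckets: Dict[str, TokenBucket] = {}

    def allow(self, api_key: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        bucket = self.buckets.get(api_key)
        if bucket is None:
            bucket = TokenBucket(self.capacity, self.rate, now)
            self.buckets[api_key] = bucket
        return bucket.allow(now)


if __name__ == "__main__":
    limiter = PerKeyLimiter(capacity=3, rate=1.0)  # 3-request burst, 1 request/second sustained
    print([limiter.allow("key-a", now=0.0) for _ in range(4)])  # [True, True, True, False]
    print(limiter.allow("key-a", now=1.0))  # True: one token refilled after 1 s
```

Passing `now` explicitly keeps the example deterministic; a live service would more likely let it default to `time.monotonic()`. Note that a rate limit only caps volume: it can't tell benchmarking from training, which is why the contractual terms still matter.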

Did Anthropic Know OpenAI Was Using Their API?

Almost certainly - and the signs point to this being a calculated move, not a surprise.

OpenAI would’ve had to register for Claude’s API access, which means Anthropic granted it knowingly. API usage is trackable, and providers like Anthropic monitor who’s calling their models and how.

The fact that this revocation happened right before GPT-5’s rumored launch - and was made public - suggests strategy, not accident. Anthropic also cited a violation of their Terms of Service, which may hint that this wasn’t the first concern raised.

So while the public statement made it sound like enforcement, it’s more likely this was a deliberate line drawn in the sand - on Anthropic’s terms, at a moment of their choosing.
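The claim that API usage is trackable is easy to picture. Here is a minimal Python sketch that summarizes request logs per API key: how often each key calls, how many tokens it uses, and which models it hits. The `RequestLog` record, its fields and the model names are assumptions for illustration; real providers’ internal log schemas aren’t public.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, Iterable, Set


@dataclass(frozen=True)
class RequestLog:
    """Hypothetical log record for one API call."""
    api_key: str
    model: str
    input_tokens: int
    output_tokens: int


@dataclass
class KeyUsage:
    """Running totals for one API key."""
    requests: int = 0
    tokens: int = 0
    models: Set[str] = field(default_factory=set)


def summarize_usage(logs: Iterable[RequestLog]) -> Dict[str, KeyUsage]:
    """Aggregate per-key request counts, total tokens and the set of models called."""
    usage: Dict[str, KeyUsage] = defaultdict(KeyUsage)
    for rec in logs:
        u = usage[rec.api_key]
        u.requests += 1
        u.tokens += rec.input_tokens + rec.output_tokens
        u.models.add(rec.model)
    return dict(usage)


if __name__ == "__main__":
    logs = [
        RequestLog("key-a", "model-x", 1200, 300),
        RequestLog("key-a", "model-y", 800, 200),
        RequestLog("key-b", "model-x", 50, 20),
    ]
    for key, u in sorted(summarize_usage(logs).items()):
        print(key, u.requests, u.tokens, sorted(u.models))
    # key-a 2 2500 ['model-x', 'model-y']
    # key-b 1 70 ['model-x']
```

Because every request carries a key tied to a registered account, even a summary this simple answers “who’s calling, and how much”.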

Bottom Line

- Anthropic revoked OpenAI’s API access to Claude in early August 2025.
- The reason given: violation of Anthropic’s Terms of Service.
- This move happened shortly before OpenAI’s expected GPT-5 launch.
- OpenAI has previously accused other AI companies, like DeepSeek, of training on its outputs - a growing concern in the AI race.
- API access is granted knowingly; usage is trackable.
- Anthropic made this revocation public, indicating a strategic decision.

Prompt It Up

If your app provides an API, ask your LLM:

“Give me ideas for detecting possible misuse of our API - especially by competitors or users hitting high volumes.

What patterns in logs, auth activity, or traffic could signal something unusual?”

It won’t stop misuse on its own, but it can help you spot it early - and act fast.
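As one example of what such a prompt might surface, here is a simple volume check in Python. It flags API keys whose daily request count sits far above the typical key, using a median-based (modified) z-score so one very heavy user doesn’t skew the baseline. The function name, the input shape (a dict of key → daily count) and the 3.5 cutoff are assumptions; 3.5 is a common rule of thumb for modified z-scores, not a tuned value.

```python
import statistics
from typing import Dict, List


def flag_unusual_keys(daily_requests: Dict[str, int], cutoff: float = 3.5) -> List[str]:
    """Return API keys whose daily request count is far above the typical key.

    Uses the modified z-score 0.6745 * (x - median) / MAD, where MAD is the
    median absolute deviation, so a single heavy caller can't inflate the
    baseline the way it would inflate a mean and standard deviation.
    """
    counts = list(daily_requests.values())
    if len(counts) < 3:
        return []  # too few keys to say what "typical" looks like
    median = statistics.median(counts)
    mad = statistics.median([abs(c - median) for c in counts])
    if mad == 0:
        # Most keys have identical volume; fall back to a plain ratio test.
        return sorted(k for k, c in daily_requests.items() if c > cutoff * max(median, 1))
    return sorted(
        k for k, c in daily_requests.items()
        if 0.6745 * (c - median) / mad > cutoff
    )


if __name__ == "__main__":
    traffic = {"key-a": 100, "key-b": 120, "key-c": 90, "key-d": 110, "key-e": 5000}
    print(flag_unusual_keys(traffic))  # ['key-e']
```

Volume is only one signal. The prompt also mentions auth activity and traffic patterns, which a check like this doesn’t cover, and a flagged key is a reason to look closer, not proof of misuse.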

Frozen Light Team Perspective

This isn’t news. This is strategy.

And we’re going to throw a conspiracy theory out there.

It looks like Anthropic either knew OpenAI was using their API - or they don’t want to admit they didn’t.

Because saying “we didn’t notice” would look bad.

But they’re also not saying, “We didn’t know.” That silence says something.

And now, right before GPT-5 is expected to launch, they go public with “terms violation”?

While OpenAI claims it was just standard access?

Feels like Anthropic is getting the surfboard ready before the GPT-5 wave hits.

If GPT-5 ends up living up to the hype, you already know where the rumors will go:

Trained on Claude?

Code built using Anthropic’s models?

So before that ride even starts, Anthropic paddles out and plants a flag.

Honestly? That’s a smart strategy.

And the logic behind our theory is simple:

If we saw a competitor messing with our Alex using an API, we wouldn’t give them a second chance.

We’d block them - no questions asked.

So the fact that Anthropic didn’t?

Yeah… weird.