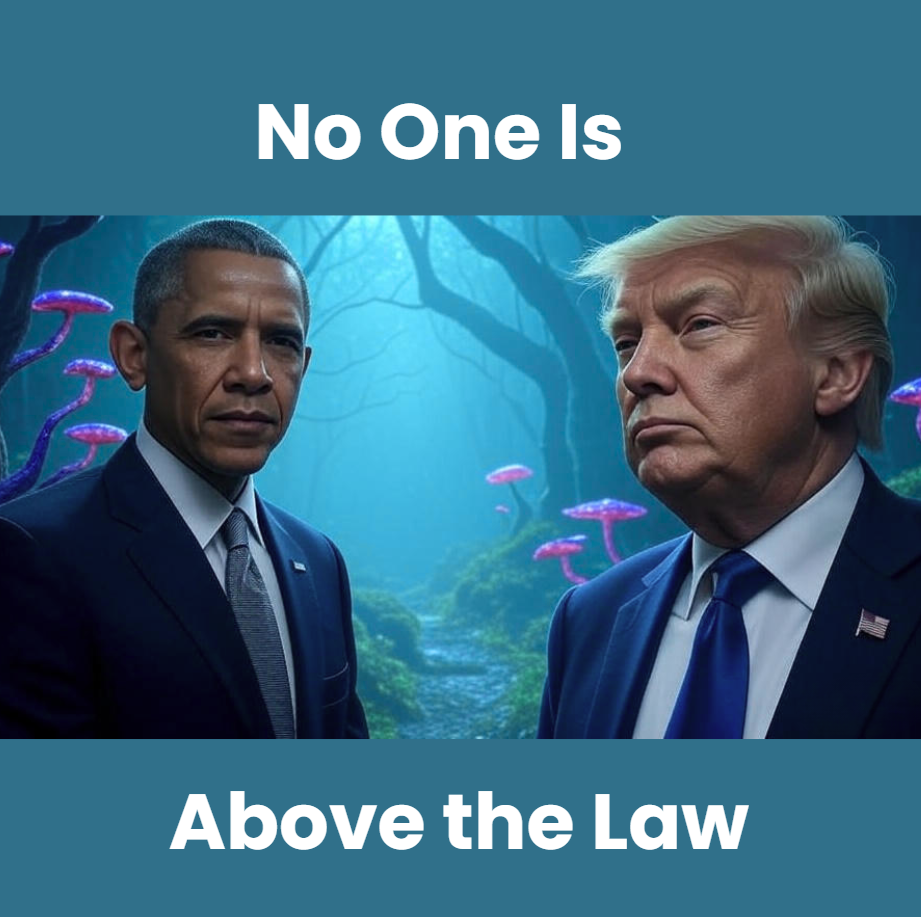

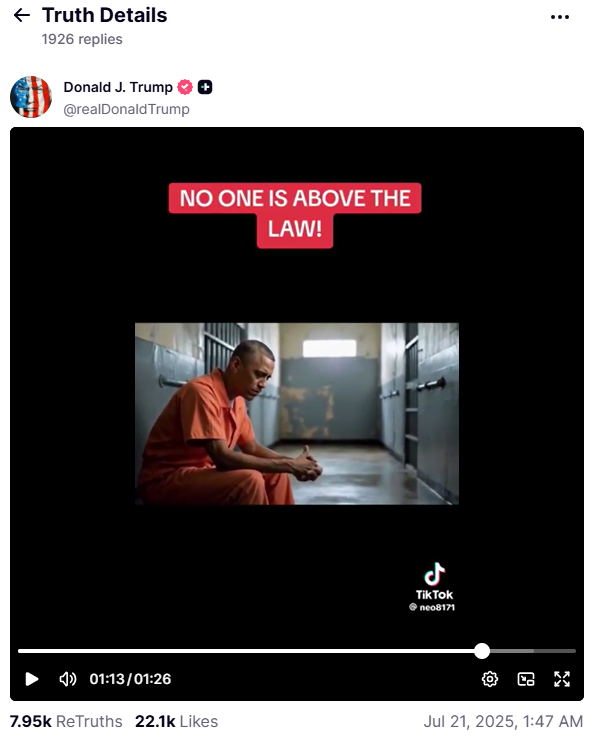

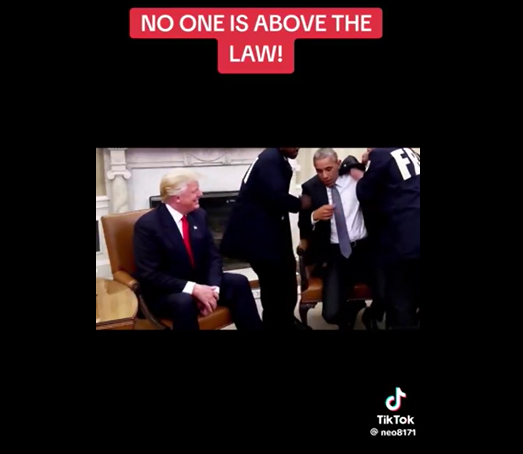

Donald Trump has posted an AI-generated deepfake video of former President Barack Obama being arrested. The video, shared on Trump’s Truth Social platform, shows a fabricated sequence of Obama being handcuffed by FBI agents, escorted from what looks like the Oval Office, and ending up in an orange jumpsuit behind bars.

The video is completely fake - but Trump didn’t say that.

What Trump Is Saying

There’s not much he needed to say - and that’s the point.

For those who already believe the story, words weren’t required. The video did the talking.

Trump simply captioned the post with:

That line has been used against him for years.

Now, he's using it to flip the narrative - visually, emotionally, and without saying anything else.

In human words?

He’s throwing the same phrase Democrats and critics have used against him back at them - but this time, visually, using a deepfake video to suggest they are the ones who should be in jail.

Connecting the dots

Current State of Deepfake Law Enforcement in the U.S.

Right now in the U.S., it’s more like a choose-your-own-legal-adventure than a functioning national policy.

- There is no comprehensive federal law that regulates deepfakes in political or public life. The main exception, the TAKE IT DOWN Act (2025), targets non-consensual intimate imagery, including AI-generated images.
- A few states - like Texas and California - have laws that make it illegal to distribute deepfakes of political candidates within a short window before an election.
- Other states (e.g., Virginia, New York, Maryland) have targeted deepfake pornography and impersonation, but these laws are mostly focused on personal harm - not public discourse.

In most situations, people rely on existing frameworks:

- Defamation: only applies if reputational harm can be proven.
- Right of publicity: covers misuse of someone's image or voice, but enforcement is inconsistent.
- False advertising and fraud: require proof of intent and damage.

Meanwhile, the proposed NO FAKES Act, first circulated as a draft in 2023 and since introduced in Congress, still hasn't become law.

So where does that leave us?

→ It’s currently legal to post an AI-generated video of a public figure getting arrested with no label, no context, and no immediate consequence - unless you happen to violate one of these scattered rules.

How Things Look in the EU

Now cross the ocean, and the picture changes - fast.

- The EU AI Act, passed in 2024, includes specific rules for synthetic content, with its transparency obligations applying from August 2026.
- Any AI-generated video, image, or audio that could mislead someone must be clearly labeled.
- Providers of general-purpose AI models (the foundation models behind tools like DeepSeek) face strict obligations of their own, including transparency and, for the most powerful models, risk assessments.

In plain English:

If someone posted Trump’s Obama arrest deepfake in Europe without a warning label, they could face fines, content takedown, or legal action - fast.

The EU sees this as a trust issue, not just a tech one.

And they’ve written the laws to protect public perception, not just platforms.

What Other Countries Are Doing

While the U.S. lags behind, a few countries are stepping up fast to deal with deepfake misuse:

🇦🇺 Australia

- Proposed laws (2024) would give regulators the power to demand that platforms quickly remove harmful AI-generated content, including deepfakes.
- The eSafety Commissioner already has authority to act on non-consensual deepfake porn.
- New regulations are expected to extend to deceptive political deepfakes ahead of elections.

🇸🇬 Singapore

- The Protection from Online Falsehoods and Manipulation Act (POFMA) covers false statements of fact online, which can include manipulated media such as deepfakes.
- Authorities can order takedowns or corrections, and spreading harmful fakes can lead to fines or jail time.

🇰🇷 South Korea

- South Korea passed a law in 2021 criminalizing non-consensual deepfake pornography, and in 2024, began drafting broader rules to address AI-generated political content and impersonation.
- Penalties include fines and imprisonment, with content platforms required to monitor AI abuse.

🇨🇳 China

- China requires all AI-generated content to be clearly marked.
- Platforms must verify the real identities of users creating synthetic content.
- Enforcement is strict, though it is also state-controlled, raising censorship concerns.

🇩🇰 Denmark: Turning Identity Into Copyright

We covered this when it was first announced - because Denmark did something other countries hadn’t even thought of yet.

In 2025, Denmark proposed a legal update that treats your face as a copyrighted asset when it’s used in a deepfake.

Here’s what it means:

- If someone creates a deepfake of you - even if you're a public figure - you can claim copyright over your likeness in that video.
- That gives you the power to demand takedowns, pursue damages, and control how your image is used in synthetic content.
- It reframes deepfakes not just as a privacy violation, but as unauthorised content creation.

By positioning identity as a form of creative ownership, Denmark offered a new way to fight back - one we believe more countries should look at closely.

Prompt It Up - What Protects You Right Now?

We’re bringing back an old prompt - one we first released alongside our article on Denmark’s move to give people copyright over their own faces in deepfake videos.

Because in a case like this, knowing your rights is the first thing you can do.

📋 Copy & Paste Prompt:

I found a deepfake of myself created or shared using [insert tool or platform], and I live in [insert country].

What are my legal rights in my country, and what steps can I take to report it, remove it, or take action?

Use it with ChatGPT, Claude, Gemini - wherever you ask serious questions.

Because until the laws catch up, this is your starting point.

Frozen Light Team Perspective

AI is evolving - and so is the ability to deepfake.

Honestly, we don’t see much difference between a deepfake and copyright infringement. In our opinion, they’re the same thing.

Yes, we get it.

When we say deepfake, we usually mean there’s harmful intent behind the video.

And when we talk about copyright infringement, the harm may be just as real, though it’s often more debatable.

But no one can deny that both can cause damage. One might be easier to defend legally, but that doesn’t make it any better.

Just the thought of someone using your face, your voice, your body - without your consent - and showcasing you in any way they want?

That’s alarming.

And while most people will understand that this particular video is fake, that’s not always how it plays out.

Remember the LA fires?

There was a picture published showing the Hollywood sign on fire - and it rattled a lot of people.

The intention was misleading and harmful.

It didn’t even show a person, yet people couldn’t tell it was fake.

The reaction was real. People were already in crisis - and they exploded. Emotionally, mentally, and publicly.

That’s what happens when no one can tell the difference.

This week, our Spotlight featured The Dor Brothers - one of the first and best-known AI video production agencies.

Their work raises awareness of how fast AI video is evolving and of the growing need to regulate it.

So, to wrap up this little rant :)

We support regulation, transparency, and giving people copyright over their own voice, body, and face.

It’s your right.

And we fully support erasing the line between copyright law and deepfake law.

Using the same law would remove the grey areas and send the right message:

Using AI to generate videos of other people without their consent should never be acceptable.

Even when we think it’s funny.

And as for the Donald Trump story we started with:

We have no opinion.

But maybe you do?

Would you share it if you were Donald Trump? And why?

Let us know what you think.