TL;DR

AI tools don't cheat - people cheat. We're having the exact same moral panic about AI that we had about calculators, spell-checkers, and the internet, and it's just as ridiculous now as it was then.

AI becomes "cheating" when: You use it to dodge learning, skip thinking entirely, or pretend you're smarter than you actually are. Basically, when you're trying to get credit for work you didn't do or understanding you don't have.

AI is perfectly fine when: You use it to supercharge your thinking, blast through barriers, and create better work through actual human-AI collaboration. The secret sauce is that you still understand, evaluate, and own the final output.

Four reality-check questions: What are you actually supposed to accomplish? Are you lying about AI's role? Did your brain engage with the output? Does this match what people expect in your situation?

Bottom line: The future belongs to people who master human-AI collaboration while keeping their critical thinking skills sharp. Stop freaking out about the tool and start getting good at using it like a professional.

Let me start with something that should be blindingly obvious but somehow isn't: AI tools don't cheat. People cheat. Tools are just tools, and losing your mind over this distinction is like blaming hammers for crooked nails.

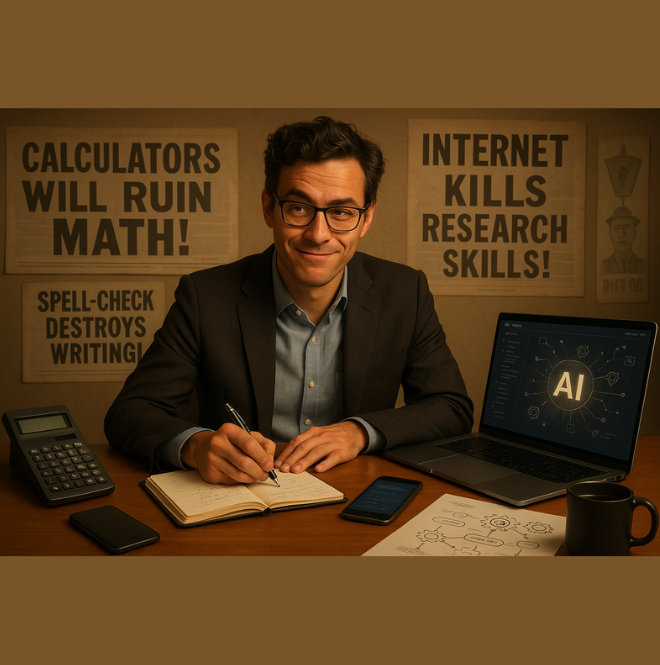

After working with automation tools and AI, I'm watching this "is AI cheating?" hysteria with the same frustration I felt watching people panic about calculators, spell-checkers, and the internet. We never learn, do we?

Here's what's actually happening - using AI becomes cheating when you use it to completely avoid thinking, learning, or doing the work you're supposed to be doing. But using AI to think better, learn faster, or produce higher-quality work? That's called "not being an idiot about available resources."

We've Been Here Before (And We're Still Terrible at Learning From History)

When calculators showed up in classrooms in the 1970s, educators completely lost their minds. "Students won't learn basic math!" they shrieked. When spell-checkers became standard, critics predicted the death of proper writing. When the internet made information accessible, people were convinced it would destroy research skills.

Here's what actually happened every time: We figured out how to use these tools appropriately, they made us dramatically more capable, and the doomsday predictions turned out to be complete nonsense.

I've built automation systems that handle mind-numbing routine tasks so people can focus on creative problem-solving. Nobody calls that "cheating" - they call it "brilliant efficiency." The exact same principle applies to AI, but somehow we've convinced ourselves this time is different.

It's not different. We're just being dramatic again.

How to Tell the Difference Between Using AI and Being a Lazy Fraud

After years of building AI-powered automation systems, I've settled on a framework that holds up in practice. Four brutally simple questions:

What Are You Actually Supposed to Be Accomplishing Here?

If the point is learning something or demonstrating your understanding, then using AI to bypass that learning is obviously cheating. If you're solving a real problem or getting legitimate work done efficiently, then AI is just another tool.

Simple test: Can you explain and defend the AI-generated output like you actually understand it? If not, you're using AI as a crutch, not a tool.

Are You Being Honest About What Actually Happened?

Don't lie about your process. If AI played a major role, acknowledge it when relevant. I've watched people pass off AI-generated work as their own brilliant thinking - the moment someone asks follow-up questions, they're completely exposed as frauds.

Did Your Brain Actually Engage With the Output?

AI outputs are frequently plausible-sounding but factually wrong or contextually inappropriate. If you're not critically evaluating and refining AI content, you're just being a mindless conduit for potentially garbage information.

I use AI tools constantly, but I never accept their output without verification. The final decisions and quality control are always mine.

Does This Match What People Expect in Your Situation?

Different contexts have different standards. What's fine for business proposals might be inappropriate for academic papers. This requires basic professional awareness, not a philosophy degree.

Real Examples: Smart Use vs. Just Cheating

Academic cheating: Student asks ChatGPT to write an entire essay and submits it as original work.

Academic legitimacy: Student uses AI to brainstorm arguments and get feedback, then develops ideas with their own analysis.

Professional stupidity: Using AI to generate client reports without understanding the analysis or being able to answer questions.

Professional competence: Using AI to draft proposals, then applying your expertise to refine and validate results.

The difference is whether the human actually engaged with the material and contributed meaningful work.

What This Means for the Future

For Educators

Stop trying to ban AI (impossible and irresponsible). Instead, redesign assessments to emphasize the skills AI can't supply for a student - critical thinking, creativity, and complex judgment. The smart educators now teach students how to use AI effectively rather than pretending it doesn't exist.

For Professionals

Develop clear policies focused on outcomes and accountability, not technological fear. Ensure AI enhances human judgment rather than replacing it. Train people on responsible use and maintain human accountability for decisions.

For Everyone

Learn to collaborate with AI while maintaining critical thinking skills. The people avoiding AI will be left behind. The people relying on it uncritically will produce garbage. The people mastering thoughtful collaboration will have massive advantages.

Bottom Line: Stop Overthinking This

The ethical question isn't whether you use AI - it's whether you use it responsibly and honestly.

AI becomes "cheating" when you use it to avoid learning or thinking. AI becomes legitimate when you use it to enhance your capabilities through genuine human-AI collaboration.

I've been using AI tools for years - they're incredibly powerful when used thoughtfully and potentially disastrous when used carelessly. The difference isn't the tool - it's the intelligence and integrity of the human using it.

The goal should be to become more capable humans who leverage AI effectively, not AI-phobic Luddites or AI-dependent zombies who can't think without algorithmic assistance.

If you can't tell the difference between using a tool to enhance your work and using it to avoid the work entirely, the problem isn't AI - it's a fundamental lack of professional judgment that would be a problem regardless of the available technology.

So let's stop having hysterical debates and start getting good at using these tools responsibly. The future is coming whether we're ready or not.

P.S. - I didn't have AI write this article from scratch, but I sure used AI assistance, spell-check, and search engines along the way. If that bothers you, the problem isn't my process - it's your understanding of how professional work actually happens.