ChatGPT 5 dropped on Friday, finally putting an end to the rumors and all that guessing. The rollout is happening right now, and, as OpenAI promises, this version is faster, smarter, and more accurate than ever before.

The big change? OpenAI added a new algorithm that automatically picks the best model for the task, ensuring everything runs smoothly and efficiently.

Now, here’s where it gets interesting: OpenAI’s putting a special focus on healthcare. We didn’t see that kind of focus before, so before we report the regular stuff (heads up, we’re letting a week pass by so the buzz will calm down and the real stuff will come up-wink, wink), we’re focusing on that for now.

Apparently, the new model’s ability to break down complex medical terms and explain lab results is drawing a lot of attention. And that actually checked out.

We were a bit mmmm at the beginning, but then we said, “What the heck, let’s see what this is all about.”

What OpenAI Is Saying About ChatGPT5

OpenAI has said that ChatGPT 5 is their fastest, most accurate model yet. It comes with improvements in reasoning, performance, and the ability to select the best algorithm for each task, whether it’s answering questions or solving complex problems. They’ve designed ChatGPT 5 to be a powerful tool for a wide range of uses, from coding to healthcare, providing clear, context-aware responses.

“We’ve designed ChatGPT 5 to be our most powerful, accurate, and fastest model yet. It’s built to handle a variety of tasks and assist in fields like healthcare, where precision is key.” Sam Altman, CEO of OpenAI

In healthcare, OpenAI emphasizes that ChatGPT 5 can simplify medical terminology, explain lab results, and offer general health advice. While it’s not meant to replace healthcare professionals, the company sees it as a tool to help users better understand their health information.

What It Means (In Human Words)

ChatGPT 5 is faster, smarter, and more reliable. The big claim? It can understand and respond to a wider range of questions with improved accuracy. It’ll even choose the best algorithm for the task based on your inputs. So, even if you don’t know which approach to take, ChatGPT 5 has got your back, making sure you get the best results.

When it comes to healthcare, this means you can use it to make sense of medical terms, understand lab results, or get basic health advice-without needing to wait for a doctor’s appointment.

But just a reminder: ChatGPT 5 is here to help explain things, not replace your doctor. It’s all about making healthcare info more accessible, so you don’t feel lost when dealing with confusing medical jargon. You can ask it to explain terms or symptoms, and it’ll break it down in a way that’s easy to understand, helping you feel more informed before you reach out to a professional.

It’s a step toward making healthcare knowledge more reachable for everyone, but the serious stuff? That’s still for the doctors

Connecting the Dots

We were initially skeptical about using a large language model (LLM) like ChatGPT 5 for medical inquiries. The idea of asking an AI about health concerns felt a bit out there. But we couldn't ignore what we found.

Talking to LLMs About Medical Stuff: Is It a Thing?

Yes, it is. And it's growing.

-

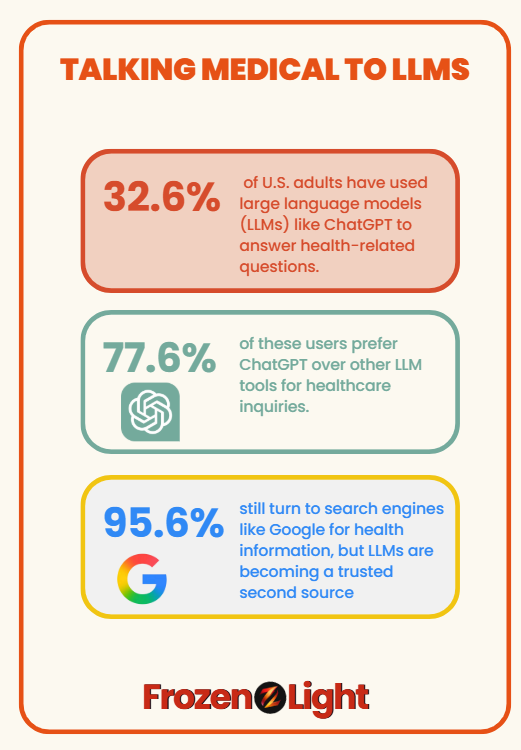

32.6% of U.S. adults have used large language models (LLMs) like ChatGPT to answer health-related questions.

-

77.6% of these users prefer ChatGPT over other LLM tools for healthcare inquiries.

-

95.6% still turn to search engines like Google for health information, but LLMs are becoming a trusted second source.

These numbers show a steady increase in the adoption of LLMs for medical assistance, indicating a growing trust in AI for healthcare.

Sources:

-

Laypeople's Use of and Attitudes Toward Large Language Models for Health Information

-

Understanding the Concerns and Choices of the Public When Using Large Language Models for Healthcare

Can We Measure It?

When it comes to checking the accuracy of information provided by AI models like ChatGPT, it's not always as straightforward as saying an answer is "right" or "wrong." Several tools and benchmarks are used to evaluate the performance of models, especially when they're answering complex questions, such as healthcare-related queries.

Key Benchmarks for Healthcare:-

MedQA: A dataset specifically designed to assess the ability of language models to answer healthcare-related questions. It helps measure how well the model can understand medical topics and provide reliable answers.

-

USMLE (United States Medical Licensing Examination): This is a standardized exam for medical professionals. When models like ChatGPT are tested on USMLE practice questions, it helps assess their ability to handle complex clinical knowledge.

A critical metric for AI, especially in healthcare, is the hallucination rate-the frequency at which the model provides false or made-up information. A lower hallucination rate means the model is more likely to provide accurate, trustworthy information. For ChatGPT, this rate is actively tracked, especially in sensitive fields like healthcare, to ensure the model isn’t giving misleading or incorrect advice.

How Did ChatGPT 5 Do?

When it comes to ChatGPT 5’s performance in healthcare, specific results on benchmarks like MedQA and USMLE have not been publicly disclosed yet. While OpenAI has shared general improvements, such as enhanced accuracy and reasoning, the detailed performance data specific to healthcare-related tasks remains unavailable.

However, OpenAI has made some general statements about ChatGPT 5’s capabilities:

-

Improved Reasoning: ChatGPT 5 is designed to provide more accurate answers, making it better equipped for understanding complex queries, including those in healthcare.

-

Better Algorithm Selection: The model can automatically select the most appropriate algorithm for different tasks, ensuring smoother performance across various applications, including health-related inquiries.

-

Reduced Hallucination Rate: Early reports indicate that ChatGPT 5 has a reduced hallucination rate of around 1.6%, meaning it's less likely to generate false or fabricated information compared to previous versions.

While these improvements indicate that ChatGPT 5 will likely perform better in healthcare-related queries than its predecessors, we don’t have specific accuracy numbers just yet.

Comparison: How Did ChatGPT 4 Perform Against Other Leading LLMs?

When it comes to healthcare-related tasks, how does ChatGPT 4 stack up against other leading models in terms of accuracy? Here’s a comparison of ChatGPT 4 with other top-performing models, based on their performance on benchmarks like MedQA and USMLE.

|

Model |

MedQA Accuracy |

USMLE Step 1 Accuracy |

USMLE Step 2 Accuracy |

Hallucination Rate |

Key Highlights |

|

ChatGPT 4 |

60–70% |

55.8% |

57.7% |

1.8% |

Strong performance in answering simpler medical queries but struggles with more complex scenarios. |

|

ChatGPT 5 |

Not Disclosed |

Not Disclosed |

Not Disclosed |

Approx. 1.6% |

Expected improvements in accuracy and reasoning, but no specific healthcare benchmarks released yet. |

|

Gemini 2.0 |

91.1% |

Not specified |

Not specified |

<1% |

Top performer, especially in specialized areas like ophthalmology, with very low hallucination rate. |

|

Claude 3 |

86.15% |

Not specified |

Not specified |

<1% |

Known for providing clear, concise medical explanations and solid general medical knowledge. |

|

Grok |

85.5% |

Not specified |

Not specified |

<1% |

Performs well in diagnostic-related queries with a solid performance in clinical reasoning. |

Key Takeaways:

-

ChatGPT 4 shows a solid performance with 60-70% accuracy on the MedQA dataset and 55.8% on USMLE Step 1, but it does struggle with more complex medical queries.

-

ChatGPT 5, while showing improvements in reasoning, does not yet have specific performance data for healthcare benchmarks like MedQA and USMLE.

-

Gemini 2.0 leads the pack with 91.1% accuracy on MedQA, making it a top performer for healthcare-related tasks.

-

Claude 3 and Grok also show strong results, though ChatGPT 5 could likely improve upon their capabilities with its enhanced reasoning abilities.

Sources:

-

MedQA Benchmark

Med-Gemini??? – Is There Such a Thing?

Yes, Google has developed a specialized AI model for healthcare called Med-Gemini. Med-Gemini is a family of large, multimodal models fine-tuned specifically for medical applications, building upon Google's Gemini models. These models are designed to handle complex medical tasks that require advanced reasoning and the ability to interpret multimodal data, including text, images, videos, and electronic health records (EHRs).

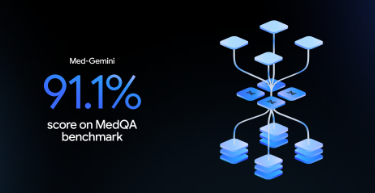

Med-Gemini has demonstrated state-of-the-art performance on several medical benchmarks. Notably, it achieved 91.1% accuracy on the MedQA (USMLE-style) benchmark, surpassing previous models like Med-PaLM 2 by 4.6%. Additionally, Med-Gemini has shown strong performance in tasks such as medical text summarization, referral letter generation, and interpreting complex medical images like 3D scans.

While Med-Gemini is a specialized model from Google designed for healthcare, it is still in the research phase and not yet available for public or clinical use. Though it is not ready for widespread deployment, Med-Gemini has already shown impressive results in healthcare-related benchmarks, outperforming ChatGPT in some areas.

Med-Gemini represents a significant advancement in AI's potential to assist in medical applications. Google continues to collaborate with the medical community to test and refine these models, ensuring their safety and reliability before real-world deployment.

source: Google's blog

source: Google's blog

Bottom Line

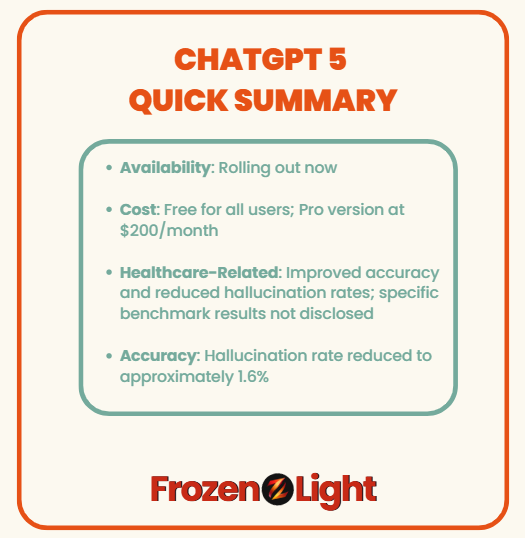

ChatGPT 5 - Key takeaways

-

Availability: Rolling out now

-

Cost: Free for all users; Pro version at $200/month

-

Healthcare-Related: Improved accuracy and reduced hallucination rates; specific benchmark results not disclosed

-

Accuracy: Hallucination rate reduced to approximately 1.6%

Prompt It Up

Knowing how to ask is part of the magic when it comes to LLMs. If you wish to consult with your ChatGPT 5 or any LLM for healthcare-related topics, we’ve drafted a prompt to help you get the best results.

Copy & paste this prompt, fill in the details, it will work for ChatGPT 5 or any LLM of your choice:

Prompt for ChatGPT 5 (or any LLM):

“I’m looking for information about [medical conditions, symptoms, or treatment]. Can you explain what it is, what causes it, and how it is typically diagnosed? Please include details on common treatment options, including both medical and lifestyle recommendations.

-

Specific details about my situation: [e.g., age, gender, existing health conditions]

-

Current symptoms or concerns: [e.g., chest pain, fatigue, dizziness]

-

Recent test results (if available): [e.g., blood pressure, lab results]

-

Other relevant information: [e.g., family medical history, allergies, medications]

Please provide information in a way that’s easy to understand for someone without a medical background.

Push it forward:

Additionally, include any relevant links or references to credible sources so I can read, understand, and verify the information.”

By filling in the details above, you’ll help the LLM provide you with clearer, more accurate insights into your medical situation. This prompt also asks for relevant links to help you verify the provided information. Always remember, while ChatGPT or any LLM can help explain things, it’s not a replacement for a consultation with a healthcare professional.

Frozen Light Team Perspective

At Frozen Light, we usually believe in doing what you do best, but when we looked at ChatGPT’s healthcare benchmarks, we found it puzzling. It didn’t seem like healthcare was its strong suit… at least not until now.

So why the sudden focus on healthcare? Is it just about closing the gap?

ChatGPT is the most used, adopted, and accessible LLM, with a milestone of 700 million active weekly users. But what we missed is that this push isn’t just about catching up with competitors, like Google-it’s about realizing that LLMs are now part of healthcare conversations. People are actively asking for medical advice from LLMs, and that trend is growing fast.

We want to say good on OpenAI for recognizing this need and stepping up to meet it. As the most widely used model, they’re trying to provide what their users need, even if the results aren't perfect yet. ChatGPT is trying, and for that, we give credit.

But here’s the thing: when it comes to benchmarks, ChatGPT 4 scored the lowest, and that's not just an opinion-it's in the numbers. OpenAI is the only one to share results across all three tests, so we know there’s a big gap. Med-Gemini and Grok have taken the lead in medical accuracy, and even with improvements in ChatGPT 5, there’s more room for growth.

Friendly reminder: LLMs, even ones as powerful as ChatGPT, don’t replace healthcare professionals. If you’re using them for medical purposes, cross-reference the information and don't rely solely on one model. We want to remind medical professionals to use multiple sources to provide solid, reliable answers.

On a personal note: We’ve all been there-Googling symptoms and freaking out. Imagine doing that with an LLM! While we’re all for exploring new tech, let’s not forget that sometimes the drama is real, and a little medical check-in with an expert never hurt. 🙂