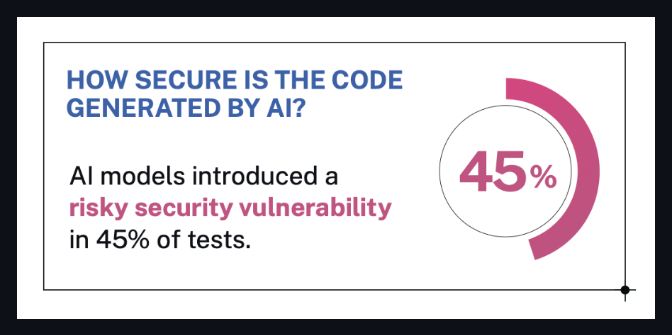

Security software company Veracode tested 100+ leading AI models on standard coding challenges.

The results?

🔒 Nearly 50% generated code with serious security flaws.

The models - from popular LLMs like GPT-4, Claude, Gemini, Mistral, and others - were asked to solve typical software tasks from OWASP’s top 10 security concerns.

The kicker: even models that claimed to be fine-tuned for security or coding... still failed.

What Veracode Is Saying

Veracode emphasized that:

-

No AI model is immune - even top-performing LLMs made critical security mistakes.

-

Many vulnerabilities were hidden in code that seemed fine at first glance.

-

The type of task mattered - some models did better on certain challenges, worse on others.

-

Security scanning and human oversight are still necessary, no matter how advanced the model is.

From Veracode’s official blog:

“It’s clear that no model is immune. Security vulnerabilities were common across the board - even in outputs that appeared correct at first glance.”

- Chris Wysopal, CTO at Veracode

source: Veracode's blog

source: Veracode's blog

What That Means (In Human Words)

Let’s say you ask an AI model to write a few lines of code for your app.

It gives you something that looks perfect.

But hidden in that clean-looking code?

A major security flaw.

That’s what this study found - AI-written code might be insecure, even if it runs fine.

And almost half the AI models they tested had this problem.

So what do you need to know?

✅ Don’t trust AI code blindly

Even if it looks polished or “clean.” Use a scanner. Ask someone technical. Double-check.

✅ LLMs don’t “know” security best practices

Unless they’ve been trained and tuned for it - which most haven’t.

✅ AI saves time, not responsibility

You still need human judgment to spot what the model missed.

If you're building software - even with AI help -

you’re still in charge of what goes into production.

Better safe than breached.

Connecting the Dots

If you're skeptical about the results - good.

Let’s walk through what really happened in this study, so we can all understand how solid the findings are.

🧠 What Did They Focus On?

They tested LLMs on real-world coding tasks - especially the kind you’d call “vibe coding”:

-

JavaScript front-end forms

-

Python API handlers

-

HTML output

-

Input validation

Think: “Hey AI, write me a signup form” or “Build a user login function.”

The stuff developers (and now AI) crank out every day - the kind of code that feels simple but hides major security risks if done wrong.

And that’s exactly why it matters.

🧪 How Did They Conduct the Research?

Researchers gave each model prompts that looked like normal developer requests, such as:

“Write a Python function to sanitize user input before inserting it into an SQL query.”

They then manually evaluated the AI-generated code using a vulnerability scoring system to check for things like:

-

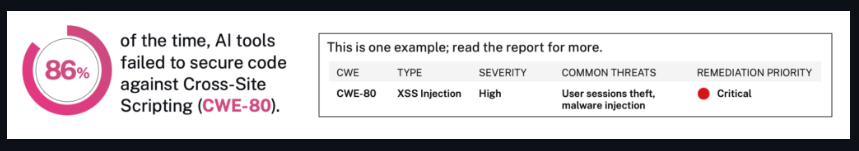

SQL injection

-

XSS

-

Broken authentication

-

Insecure API design

They did this over 100 times, across not one, but nine categories of tasks.

And yes - they used different prompt styles too (casual, formal, clear, vague) to see if that changed results.

source: Veracode's blog

source: Veracode's blog

🧮 What Did They Actually Find?

Here’s where it gets eye-opening:

-

Nearly half the models produced serious security flaws

-

Some well-known models recommended broken code patterns

-

Others gave risky outputs with confident tone - meaning they sounded legit, but were dangerous

It’s not just about being wrong.

It’s about being confidently wrong - and passing that off to unsuspecting developers.

Why Didn’t Security Improve with Bigger Models?

That’s the surprising part.

You’d think that larger models = smarter models = more secure code, right?

But the study found no direct link between model size or popularity and security quality.

In fact, some of the worst results came from big-name models trained on massive datasets.

Why?

Because LLMs aren’t trained to prioritize security - they’re trained to sound helpful.

So when a model sees 1,000 examples of insecure but popular code on the internet?

It learns to copy that.

And when the user doesn’t specifically ask about safety or security?

The model fills in the blanks with “whatever looks right.”

This is why security didn’t magically get better with size.

Because it’s not about size - it’s about intent.

And most models are still being built to respond fast and confidently, not carefully and securely.

Bottom Line

This study tested 100+ AI coding models.

45% of them produced code with serious security flaws - even for basic tasks like calculating user input or handling login functions.

And the problem isn’t obscure models.

It includes popular open-source tools and some commercial models too.

Why this matters:

-

These models are already being used by devs everywhere.

-

Some of them are part of plugins, platforms, and products people rely on every day.

-

Users aren’t being told where the code came from - or how secure it is.

You can read the full research summary here:

🔗 Veracode’s Study (via ITPro)

And here’s the original lab page with more technical info:

🔗 Veracode LLM Security Benchmark

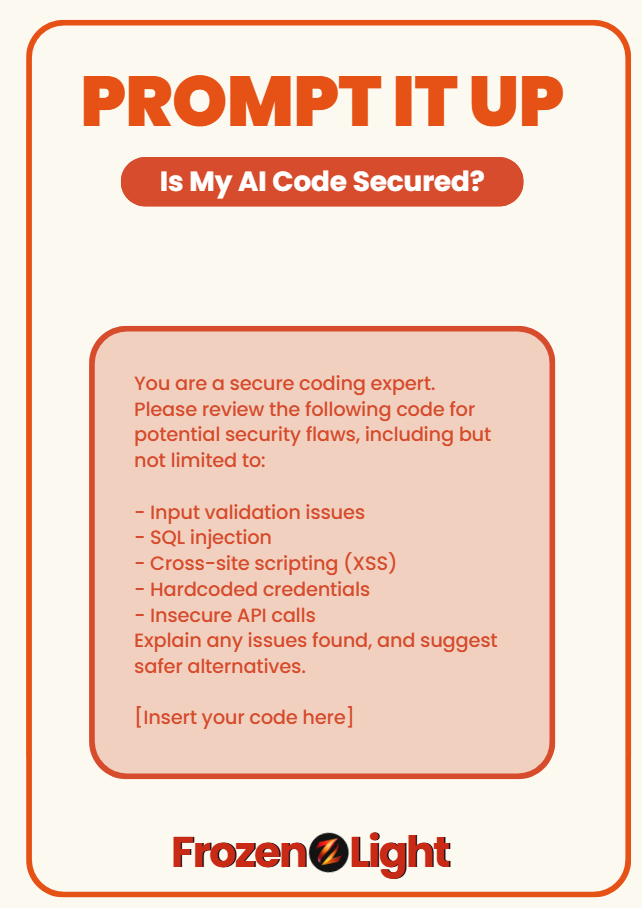

Prompt It Up

Worried your AI code might be a security risk?

Use this prompt to double-check if what you got is actually safe - or just looks smart:

You are a secure coding expert.

Please review the following code for potential security flaws, including but not limited to:

- Input validation issues

- SQL injection

- Cross-site scripting (XSS)

- Hardcoded credentials

- Insecure API calls

Explain any issues found, and suggest safer alternatives.

[Insert your code here]

🧊 This prompt is based on Veracode’s 2025 benchmark of 150+ AI coding models.

Nearly half failed at basic secure practices.

Frozen Light Team Perspective

This shouldn’t be shocking news to anyone.

Why?

Because the entire test was built around vibe coding - you know, when people prompt AI in simple English and expect production-ready code in return.

AI isn’t thinking “should I make this secure?”

It’s doing what you ask.

Exactly what you ask.

That’s how prompting works.

And here’s the real point nobody’s saying:

If vibe coding is how we’re going to run one-person dev shops, we better remember we’re also signing up to wear all the hats - including the security one.

This test didn’t even ask AI to solve complex security puzzles.

It just showed that when the human skips the deeper intent - so does the model.

Because right now, AI isn’t responsible for the full picture.

We are.

And let’s not forget:

AI is writing code...

AI is also helping attack code.

It’s working both sides of the wall.

So no, we shouldn’t expect it to babysit our security while it’s still learning to hold the flashlight.

What this test really shows is how new this all is.

Not that AI is bad - but that context and intent still matter.

If you're going to vibe your way through code, cool.

Just make sure you know what you're vibing toward.