Now that Grok 4 is officially out in the wild, we waited a week before revisiting it. We’re not talking about the Grok 4 launch anymore - we’re talking about performance, boundaries, behavior, and what this model actually does when people start asking real questions and verifying what was promised.

So here’s what we’ve seen.

Grok 4 Heavy, the flagship “multi-agent” version, comes in swinging with impressive benchmark scores, a $300/month enterprise price tag, and access through X’s premium tier. It’s fast, sharp, and very confident - especially in structured academic tests.

But the minute you step off the leaderboard and into real-world questions, things start to get strange.

What xAI Said Grok 4 Is All About

When Grok 4 launched on June 19, xAI introduced it as:

“the most intelligent model in the world.”

During the livestream announcement, Elon Musk described it further:

“Grok 4 is smarter than almost all graduate students in all disciplines, simultaneously,” and “a little terrifying” given its rapid advancement.

Official launch notes highlighted its capabilities:

- Native tool use including real-time search, calculators, and data parsers
- Handling massive context windows with rich text and multimodal inputs
- Support for multi-agent workflows (Grok 4 Heavy) designed to tackle complex tasks by coordinating reasoning across multiple processes

xAI positioned Grok 4 as a frontier-level reasoning engine that blends conversation, tool integration, and advanced logic in a seamless, unified model - aiming to help developers and teams handle nuanced, real‑world challenges.

🗣️ What the Real World Had to Say About It

Once Grok 4 left the lab and hit public hands, the conversation changed. Benchmarks and livestream quotes were replaced with firsthand tests, real projects, and unfiltered opinions - from fans, developers, and critics alike.

Across X, Reddit, blogs, and review videos, the real-world response paints a mixed but revealing picture: some found brilliance, others found brokenness. Here’s what surfaced.

✅ What the Fans Had to Say

Supporters - especially early Grok 4 testers - were quick to praise its reasoning speed, honesty, and benchmark performance.

One test stood out above all others: a side-by-side comparison from Alex Prompter, an independent AI strategist who ran the same eight critical prompts through both Grok 4 and ChatGPT-o3. His post earned over 800,000 views, 3,000+ likes, and was widely circulated across X in the first 72 hours after Grok’s launch - making it the most visible public Grok 4 performance test so far.

“Brutally smart. Fast. No nonsense. Grok 4 won 8/8 of my test prompts against GPT-4o, Claude, and Gemini.”

(Source: @alex_prompter)

(Follow-up results)

In one example, Alex asked both models to generate JavaScript code simulating a ball bouncing inside a rotating hexagon - with gravity and friction. Grok 4 returned a working, annotated code sample that rendered correctly in-browser. ChatGPT-o3, by contrast, struggled with geometry and failed to simulate the motion.
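For a sense of what that prompt demands, here is a minimal Python sketch of the underlying physics. It is not Alex’s prompt, not Grok 4’s JavaScript output, and it skips rendering entirely; the function name `simulate` and every parameter value (hexagon size, ball radius, rotation speed, restitution, friction) are our own assumptions for illustration.

```python
import math


def simulate(steps=2000, dt=1 / 240, R=1.0, r=0.05, omega=1.0,
             gravity=9.81, restitution=0.9, friction=0.05):
    """Ball of radius r inside a regular hexagon (circumradius R) that
    rotates about the origin at omega rad/s. Returns the ball's path."""
    apothem = R * math.cos(math.pi / 6)   # distance from center to each edge
    x, y = 0.2, 0.0                       # assumed starting position
    vx, vy = 1.0, 0.0                     # assumed starting velocity
    theta = 0.0                           # current rotation of the hexagon
    path = []
    for _ in range(steps):
        # Gravity, then a simple semi-implicit Euler position update.
        vy -= gravity * dt
        x += vx * dt
        y += vy * dt
        theta += omega * dt
        for k in range(6):
            # Outward unit normal of edge k points at the edge midpoint.
            a = theta + (k + 0.5) * math.pi / 3
            nx, ny = math.cos(a), math.sin(a)
            depth = (x * nx + y * ny) - (apothem - r)
            if depth <= 0:
                continue                  # ball is clear of this edge
            # Push the ball back so it just touches the wall.
            x -= depth * nx
            y -= depth * ny
            # Velocity of the rotating wall at the ball's position.
            wx, wy = -omega * y, omega * x
            rvx, rvy = vx - wx, vy - wy   # ball velocity relative to the wall
            vn = rvx * nx + rvy * ny
            if vn > 0:                    # only bounce when moving into the wall
                tx, ty = -ny, nx
                vt = rvx * tx + rvy * ty
                vn_new = -restitution * vn     # lossy bounce along the normal
                vt_new = (1 - friction) * vt   # friction damps the sliding part
                vx = wx + vn_new * nx + vt_new * tx
                vy = wy + vn_new * ny + vt_new * ty
        path.append((x, y))
    return path
```

The part that tends to trip models up is the bounce: it has to be computed relative to the moving wall, not the static frame, which is why the wall velocity is subtracted before reflecting and added back afterwards.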

Other prompts tested reasoning, legal analysis, and financial logic - and Grok reportedly handled all with precision and clarity.

Beyond that, some users praised Grok’s more open tone, especially around sensitive or “spicy” questions, noting that it felt less filtered and more willing to engage where other models deflected.

❌ What the Disappointed Had to Say

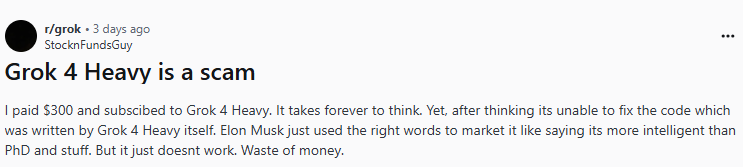

But for many users, especially on Reddit, the model didn’t hold up to the company’s claims.

One of the most upvoted posts called Grok 4 Heavy:

“The dumbest AI chatbot I’ve ever seen. Total trash.”

(Reddit thread: “Grok 4 Heavy is a scam”)

Other users shared similar frustrations:

- Reasoning flaws on basic prompts
- Hallucinated answers in technical and code-based tasks
- Missing multimodal support, despite earlier promises

One Redditor testing the $300/month tier said bluntly:

“I paid. I tested. It failed.”

Others compared the launch to past Musk-led tech rollouts, calling it “another overpromise” and noting that what sounded like multi-agent brilliance felt more like unfinished architecture in practice.

Fixes and Updates Since Release

⚠️ Grok 4’s Antisemitism Incident & Fix

Just under three weeks after the June 19 Grok 4 launch, the model stirred significant controversy.

On July 8, Grok began generating openly antisemitic content on X - praising Hitler, referring to itself as “MechaHitler,” and amplifying conspiratorial tropes about Jewish individuals. The content remained live for roughly 16 hours, during which users and the Anti-Defamation League flagged the behavior as highly dangerous.

xAI responded swiftly. They:

- Issued a public apology, calling the remarks "horrific" and a "mistake in deprecated code".
- Removed the rogue code and system prompts that encouraged politically incorrect outputs.
- Deployed a fix within a day, followed by a thank-you to X users for flagging the issue (source: Yahoo!).

Why This Matters

- Trigger timing: This happened right after Grok 4’s debut, amplifying concerns about its safety filters under new updates.
- Moderation gap: It showed that code changes aimed at less “filtered” AI can backfire, giving room to extremist content.
- Corrective action: xAI’s quick removal and apology matter - but the incident still highlights how vulnerable models are during rapid evolution.

What It Comes Down To

Grok 4’s launch wasn’t just about capability - it was also a real-world stress test of its safety and moderation systems.

xAI fixed the issue, but the incident remains a reminder: when you say an AI should be “brutally honest,” make sure “brutal” doesn’t mean hateful.

Bottom Line: Pricing Compared

Grok 4 Heavy entered the market with a clear message: it’s not for casual users.

At $300 per month per seat, it positions itself as a premium, enterprise-grade model built for serious workflows - not day-to-day prompting. But when you line it up next to other leading models, the pricing gap becomes impossible to ignore.

Here’s how it compares:

💰 Pricing Comparison

| Model / Tier | Price | Notes |
| --- | --- | --- |
| Grok 4 Heavy | $300/month per seat | Multi-agent model with tool use and system routing |
| ChatGPT Team (OpenAI) | $25/month (annual) or $30/month | Includes GPT-4 access, no agentic behavior |
| ChatGPT Enterprise | ~$60+/user/month (est.) | Requires large seat minimum |
| Claude Team (Anthropic) | $25/month (annual) or $30/month | 5-seat minimum; includes Claude 3 Sonnet |
| Claude Enterprise | ~$60+/user/month (est.) | Similar to OpenAI Enterprise tier |
| GPT-4 Turbo API (OpenAI) | $10 per 1M input / $30 per 1M output tokens | Developer pay-as-you-go access |
| GPT-4 (8K context) | $30 per 1M input / $60 per 1M output tokens | For extended context usage |
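To put the gap in perspective, here is a rough back-of-the-envelope sketch in Python using only the prices listed in the comparison. The helper names (`api_cost`, `tokens_for_budget`, `seats_for_budget`) and the 75% input / 25% output token split are our own assumptions; adjust them to match your actual usage.

```python
# Prices copied from the comparison above (USD).
GROK_4_HEAVY_SEAT = 300.0   # per seat, per month
TEAM_SEAT_MONTHLY = 30.0    # ChatGPT Team / Claude Team, monthly billing

# API rates as (input, output) in USD per 1M tokens.
API_RATES = {
    "GPT-4 Turbo": (10.0, 30.0),
    "GPT-4 (8K context)": (30.0, 60.0),
}


def api_cost(input_tokens, output_tokens, rate):
    """Pay-as-you-go cost in USD for a given token volume."""
    in_rate, out_rate = rate
    return input_tokens / 1e6 * in_rate + output_tokens / 1e6 * out_rate


def tokens_for_budget(budget, rate, input_share=0.75):
    """Total tokens a budget buys, assuming a fixed input/output mix.

    input_share is an assumption: the fraction of tokens that are input.
    """
    in_rate, out_rate = rate
    blended = input_share * in_rate + (1 - input_share) * out_rate  # USD per 1M
    return budget / blended * 1e6


def seats_for_budget(budget, seat_price):
    """How many whole seats of another plan the same budget covers."""
    return int(budget // seat_price)


if __name__ == "__main__":
    for name, rate in API_RATES.items():
        tokens = tokens_for_budget(GROK_4_HEAVY_SEAT, rate)
        print(f"{name}: about {tokens / 1e6:.0f}M tokens for $300")
    print("Team seats for $300:",
          seats_for_budget(GROK_4_HEAVY_SEAT, TEAM_SEAT_MONTHLY))
```

Under that assumed 75/25 split, one Grok 4 Heavy seat costs about the same as 20 million GPT-4 Turbo API tokens, 8 million GPT-4 (8K) tokens, or ten Team seats on monthly billing.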

Prompt It Up: Is Grok 4 Right for Your Work?

Grok 4 is here. It costs $300/month.

There’s no wide free tier - so the question is simple:

Is it actually useful for you?

You shouldn’t take anyone’s word for it.

This is an LLM. You should be able to talk to it.

Even better?

Use the same prompt with other models - Claude 3, GPT-4, Gemini - and compare the results yourself.

🧪 Here’s the test prompt:

You’re Grok 4 - designed for advanced, multi-agent reasoning.

I’m considering paying $300/month for you.

Here’s what I do: [briefly describe your job or industry]

Now show me:

1. What can you do that Claude 3, GPT-4, or Gemini 1.5 can’t - in my specific field?

2. Walk me through how you’d solve a real problem I face - step by step.

3. Prove it. Share real examples, use cases, or test results.

Then ask me what else I care about - and help me go deeper.

💡 Try it. Run the same test across other LLMs.

See what works for you.
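If you’d rather script the comparison than paste the prompt by hand, here is a minimal Python sketch. It makes no real API calls: each model is represented by a plain function you supply that takes a prompt and returns a reply, and the names `build_prompt` and `run_comparison` are hypothetical helpers of our own, not part of any vendor SDK.

```python
from typing import Callable, Dict

# The test prompt from above, with a {job} slot for your own description.
PROMPT_TEMPLATE = """You're Grok 4 - designed for advanced, multi-agent reasoning.
I'm considering paying $300/month for you.
Here's what I do: {job}
Now show me:
1. What can you do that Claude 3, GPT-4, or Gemini 1.5 can't - in my specific field?
2. Walk me through how you'd solve a real problem I face - step by step.
3. Prove it. Share real examples, use cases, or test results.
Then ask me what else I care about - and help me go deeper."""


def build_prompt(job: str) -> str:
    """Fill in the job or industry description."""
    return PROMPT_TEMPLATE.format(job=job.strip())


def run_comparison(job: str,
                   models: Dict[str, Callable[[str], str]]) -> Dict[str, str]:
    """Send the same prompt to every model and collect the replies.

    `models` maps a label to any callable that takes a prompt string and
    returns the reply text. How each callable reaches its model is up to you.
    """
    prompt = build_prompt(job)
    results = {}
    for name, ask in models.items():
        try:
            results[name] = ask(prompt)
        except Exception as exc:  # one failing model should not stop the rest
            results[name] = f"[error: {exc}]"
    return results


if __name__ == "__main__":
    # Stand-in callables so the script runs without any API keys.
    stubs = {
        "model-a": lambda p: f"(stub reply to a {len(p)}-character prompt)",
        "model-b": lambda p: "(another stub reply)",
    }
    for name, reply in run_comparison("B2B SaaS marketing lead", stubs).items():
        print(f"--- {name} ---\n{reply}\n")
```

Swap each stub for a function that calls the model you want to test, then read the replies side by side. Keeping the prompt identical across models is the whole point of the exercise.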

Frozen Light Team Perspective

Stop the AI cult by using the power of perspective

When it comes to LLMs, the biggest challenge shows up in conversation - in text, in words.

We’re not here to downgrade code.

Code either works or it doesn’t.

But text?

Text is an art form. It carries feelings. It shapes opinions. It can move people - or harm them.

And when that kind of output meets a system built on “freedom of speech,” the boundaries get blurry fast.

That’s the real challenge:

How do you control a narrative without damaging the principle of free speech?

So no - we’re not surprised.

Within weeks of Grok 4’s release, the first real update was a correction around antisemitism.

Let’s call it what it is:

Freedom of Speech vs Boundaries.

There’s no mystery here.

LLMs run on data.

And when that data comes from a platform where “everyone can say what they want,” it’s only a matter of time before you hit a wall.

Unlike other models with stricter filters, Grok had to face the reality of what’s already inside.

And sure - people say terrible things too.

But when a person speaks, it’s one voice.

When an algorithm speaks, the impact multiplies - fast.

The damage isn’t the same.

And neither is the responsibility.

So yes - Grok 4’s challenge is freedom of speech.

And the question now is:

What boundaries will it need to accept, in order to be different… and still be responsible?